Improvement: Unlike word2vec, RNNs learn representations specific to the task, use deeper networks, and take the context of all earlier words into account, which allows them to perform better.

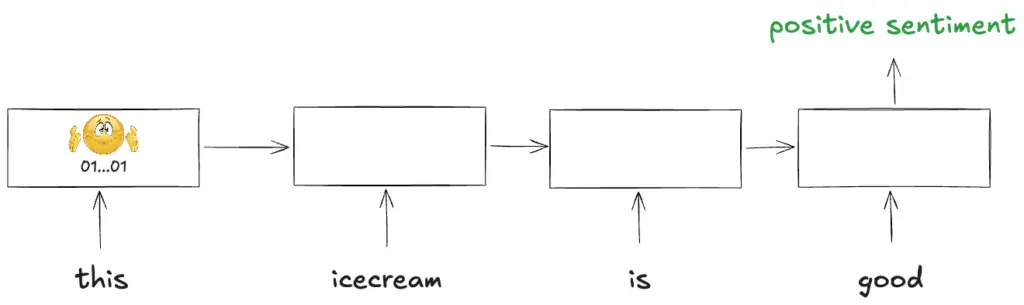

Technical: They process the input one step at a time (a character, sub-word, or word), applying the same network weights at every step. Each step folds the new token’s meaning into a running context and passes that context on to the next step; this recurrence is what gives them their name. They either make a prediction at every step or a single final prediction at the end.
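
To make the recurrence concrete, here is a minimal sketch in plain Python. It assumes a simple tanh (Elman-style) update, which the text above does not specify, and all names (`rnn_step`, `run_rnn`, `W_xh`, `W_hh`, `b_h`) are hypothetical, chosen only for illustration. The weights are random rather than trained, and the prediction layer that would read the context is left out.

```python
import math
import random

def rnn_step(x, h, W_xh, W_hh, b_h):
    """One recurrent step: fold input x into context h and return the new context."""
    new_h = []
    for i in range(len(h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))  # contribution of the new token
        total += sum(W_hh[i][k] * h[k] for k in range(len(h)))  # contribution of the earlier context
        new_h.append(math.tanh(total))
    return new_h

def run_rnn(inputs, W_xh, W_hh, b_h):
    """Apply the same weights at every step; return the context after each step."""
    h = [0.0] * len(b_h)  # empty context before the first token
    contexts = []
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        contexts.append(h)
    return contexts

def random_matrix(rows, cols, rng):
    """Untrained placeholder weights (assumption: a real model would learn these)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
input_size, hidden_size = 3, 4
W_xh = random_matrix(hidden_size, input_size, rng)
W_hh = random_matrix(hidden_size, hidden_size, rng)
b_h = [0.0] * hidden_size

# Toy sequence of three one-hot "tokens".
sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
contexts = run_rnn(sequence, W_xh, W_hh, b_h)

per_step = contexts   # one context per step, for a prediction at every step
final = contexts[-1]  # last context only, for a single prediction at the end
```

The two prediction modes mentioned above differ only in which contexts are read: tagging-style tasks would feed every entry of `per_step` to an output layer, while classification-style tasks would use `final` alone.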